More and more products and services are taking advantage of the modeling and prediction capabilities of AI. This article presents the nvidia-docker tool for integrating AI (Artificial Intelligence) software bricks into a microservice architecture. The main advantage explored here is the use of the host system's GPU (Graphics Processing Unit) resources to accelerate multiple containerized AI applications.

To understand the usefulness of nvidia-docker, we will start by describing what kind of AI can benefit from GPU acceleration. Secondly, we will present how to set up the nvidia-docker tool. Finally, we will describe which tools are available to use GPU acceleration in your applications and how to use them.

Why use GPUs in AI applications?

In the field of artificial intelligence, two main subfields are used: machine learning and deep learning. The latter is part of a larger family of machine learning methods based on artificial neural networks.

In the context of deep learning, where operations are essentially matrix multiplications, GPUs are much more efficient than CPUs (Central Processing Units). This is why the use of GPUs has grown in recent years. Indeed, GPUs are considered the heart of deep learning because of their massively parallel architecture.
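To make the matrix-multiplication claim concrete, here is a minimal, CPU-only Python sketch of a fully connected layer. The function names and the toy weights are hypothetical; the point is that every output cell is an independent dot product, which is exactly the kind of work a GPU can spread across thousands of threads.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Naive matrix product: y[i][j] = sum_k x[i][k] * w[k][j]."""
    n, k = len(x), len(x[0])
    if len(w) != k:
        raise ValueError("inner dimensions do not match")
    m = len(w[0])
    # Each (i, j) cell is computed independently of the others: a GPU can
    # assign every cell to its own thread, while this CPU loop does them in turn.
    return [[sum(x[i][p] * w[p][j] for p in range(k)) for j in range(m)] for i in range(n)]


def dense_forward(x: Matrix, w: Matrix, b: List[float]) -> Matrix:
    """Dense layer with a ReLU activation: relu(X @ W + b)."""
    y = matmul(x, w)
    return [[max(0.0, v + b[j]) for j, v in enumerate(row)] for row in y]


if __name__ == "__main__":
    batch = [[1.0, 2.0], [3.0, 4.0]]      # 2 samples, 2 features (toy values)
    weights = [[0.5, -1.0], [0.25, 1.0]]  # 2 inputs -> 2 outputs (toy values)
    bias = [0.0, -3.0]
    print(dense_forward(batch, weights, bias))  # → [[1.0, 0.0], [2.5, 0.0]]
```

A real layer has the same shape of computation with matrices of thousands of rows and columns, which is why the parallelism pays off.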

However, GPUs cannot execute just any program. They rely on a dedicated programming platform (CUDA for NVIDIA GPUs) to take advantage of their architecture. So how do you use and communicate with GPUs from your applications?

The NVIDIA CUDA technology

NVIDIA CUDA (Compute Unified Device Architecture) is a parallel computing architecture combined with an API for programming GPUs. CUDA translates application code into an instruction set that GPUs can execute.

A CUDA SDK and libraries such as cuBLAS (Basic Linear Algebra Subroutines) and cuDNN (Deep Neural Network library) have been developed to communicate easily and efficiently with a GPU. CUDA is available in C, C++ and Fortran, and there are wrappers for other languages such as Java, Python and R. For example, deep learning libraries like TensorFlow and Keras are built on these technologies.
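Since CUDA libraries such as cuBLAS and cuDNN are shipped as shared libraries, a quick way to check whether a host or container can see them is to ask the dynamic loader. The sketch below uses Python's standard `ctypes.util.find_library`; the helper name and the library list are assumptions. On Linux the lookup relies on the linker cache, so libraries installed outside it (for example under a custom CUDA path) may be reported as missing.

```python
import ctypes.util
from typing import Dict, Iterable, Optional

# Short names passed to find_library ("cudart" -> libcudart.so.*, etc.).
# This list is an assumption; adapt it to the libraries your image ships.
CUDA_LIBRARIES = ("cudart", "cublas", "cudnn")


def find_cuda_libraries(names: Iterable[str] = CUDA_LIBRARIES) -> Dict[str, Optional[str]]:
    """Map each library to the file name the loader resolves, or None if not found."""
    return {name: ctypes.util.find_library(name) for name in names}


if __name__ == "__main__":
    for name, found in find_cuda_libraries().items():
        print(f"{name:8s} -> {found or 'not found'}")
```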

Why use nvidia-docker?

Nvidia-docker addresses the needs of developers who want to add AI capabilities to their applications, containerize them and deploy them on servers powered by NVIDIA GPUs.

The goal is to set up an architecture that allows the development and deployment of deep learning models in services exposed through an API. As a result, the utilization rate of GPU resources is optimized by making them available to several application instances.

In addition, we benefit from the advantages of containerized environments:

- Isolation of the instances of each AI model.

- Colocation of several models with their specific dependencies.

- Colocation of the same model under several versions.

- Consistent deployment of models.

- Model performance monitoring.
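As an illustration of colocating several versions of the same model, the sketch below builds one `docker run` command per version and pins each container to a GPU through the `NVIDIA_VISIBLE_DEVICES` environment variable read by the NVIDIA container runtime. The image names (`my-model:1.0`, `my-model:2.0`), container names and in-container port 8080 are hypothetical, and the commands are only printed, not executed.

```python
from typing import List


def model_container_cmd(image: str, name: str, gpu_index: int, host_port: int) -> List[str]:
    """Build a `docker run` command serving one model version on one GPU.

    Port 8080 inside the container is a hypothetical value for the model API.
    """
    return [
        "docker", "run", "-d", "--rm",
        "--name", name,
        "--runtime", "nvidia",                         # NVIDIA runtime (nvidia-docker v2)
        "-e", f"NVIDIA_VISIBLE_DEVICES={gpu_index}",   # GPU(s) exposed to this container
        "-p", f"{host_port}:8080",                     # host port -> model API port
        image,
    ]


if __name__ == "__main__":
    # Two versions of the same (hypothetical) model side by side on GPUs 0 and 1.
    deployments = [
        model_container_cmd("my-model:1.0", "model-v1", gpu_index=0, host_port=8081),
        model_container_cmd("my-model:2.0", "model-v2", gpu_index=1, host_port=8082),
    ]
    for cmd in deployments:
        print(" ".join(cmd))
        # subprocess.run(cmd, check=True)  # on a host with Docker and the NVIDIA runtime
```

Giving both containers the same index would instead make them share a single GPU, which is the utilization scenario the architecture aims for.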

Natively, using a GPU in a container requires installing CUDA in the container and granting privileges to access the device. With this in mind, the nvidia-docker tool was developed, allowing NVIDIA GPU devices to be exposed in containers in an isolated and secure manner.

At the time of writing this article, the latest version of nvidia-docker is v2. This version differs significantly from v1 in the following ways:

- Version 1: Nvidia-docker is implemented as an overlay to Docker. That is, to create the container you had to use nvidia-docker (e.g. `nvidia-docker run ...`), which performs the actions (among others, the creation of volumes) that make the GPU devices visible in the container.

- Version 2: Deployment is simplified by replacing Docker volumes with Docker runtimes. To launch a container, you now use the NVIDIA runtime through Docker (e.g. `docker run --runtime nvidia ...`).

Note that because of their different architectures, the two versions are not compatible. An application written for v1 must be rewritten for v2.
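The difference between the two versions is most visible in how a GPU container is started. This Python sketch (the helper name is hypothetical) only assembles the command line for each version so they can be compared; `CUDA_IMAGE` is an assumed image tag that should be replaced by one that exists on Docker Hub and matches your driver.

```python
from typing import List, Sequence

# Assumed image tag: pick one that exists on Docker Hub and matches your driver.
CUDA_IMAGE = "nvidia/cuda:11.4.3-base-ubuntu20.04"


def gpu_run_command(image: str, args: Sequence[str], version: str = "v2") -> List[str]:
    """Build the command that starts `image` with GPU access for a given nvidia-docker version."""
    if version == "v1":
        # v1: the nvidia-docker wrapper prepares volumes and devices, then calls Docker.
        prefix = ["nvidia-docker", "run", "--rm"]
    elif version == "v2":
        # v2: plain Docker, with the NVIDIA runtime selected explicitly.
        prefix = ["docker", "run", "--rm", "--runtime", "nvidia"]
    else:
        raise ValueError(f"unknown nvidia-docker version: {version!r}")
    return prefix + [image] + list(args)


if __name__ == "__main__":
    for version in ("v1", "v2"):
        print(version, " ".join(gpu_run_command(CUDA_IMAGE, ["nvidia-smi"], version)))
```

Recent Docker releases (19.03 and later) combined with the NVIDIA Container Toolkit also accept `docker run --gpus all ...`, which does not require naming the runtime explicitly.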

Setting up nvidia-docker

The elements required to use nvidia-docker are:

- A container runtime.

- An available GPU.

- The NVIDIA Container Toolkit (the main component of nvidia-docker).
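Before going further, a small Python sketch can report which of these elements seem to be present on the host. It is an assumption-based check: it looks for executables commonly installed by each component (`docker` or `podman`, `nvidia-container-runtime`, `nvidia-container-cli` or `nvidia-ctk`), and these names may differ on your distribution. GPU availability is tested by running `nvidia-smi -L`, which lists the detected GPUs.

```python
import shutil
import subprocess
from typing import Dict

# Executables commonly installed by each component (assumed names;
# they may differ depending on the distribution and package versions).
RUNTIME_EXECUTABLES = ("docker", "podman")
TOOLKIT_EXECUTABLES = ("nvidia-container-runtime", "nvidia-container-cli", "nvidia-ctk")


def on_path(*executables: str) -> bool:
    """True if at least one of the executables is found on PATH."""
    return any(shutil.which(exe) is not None for exe in executables)


def gpu_visible() -> bool:
    """True if `nvidia-smi -L` runs successfully and lists at least one GPU."""
    if not on_path("nvidia-smi"):
        return False
    try:
        result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


def check_prerequisites() -> Dict[str, bool]:
    """Map each required element to whether it appears to be present."""
    return {
        "container runtime": on_path(*RUNTIME_EXECUTABLES),
        "available GPU": gpu_visible(),
        "NVIDIA Container Toolkit": on_path(*TOOLKIT_EXECUTABLES),
    }


if __name__ == "__main__":
    for element, present in check_prerequisites().items():
        print(f"{element:26s} {'OK' if present else 'missing'}")
```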

Prerequisites

Docker

A container runtime is required to run the NVIDIA Container Toolkit. Docker is the recommended runtime, but Podman and containerd are also supported.

The official documentation describes the Docker installation procedure.

NVIDIA driver

Drivers are required to use a GPU device. For NVIDIA GPUs, the drivers for a given OS can be obtained from the NVIDIA driver download page by filling in the information about the GPU model.

The drivers are installed through the downloaded executable. On Linux, use the following commands, replacing the file name with that of the downloaded file:

```shell
# Make the installer executable, then run it as root
chmod +x NVIDIA-Linux-x86_64-470.94.run
sudo ./NVIDIA-Linux-x86_64-470.94.run
```

Reboot the host machine at the end of the installation so that the installed drivers are taken into account.
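After the reboot, `nvidia-smi` (installed with the driver) can confirm that the GPU and driver are detected. The sketch below queries the GPU name and driver version in CSV form and parses the result; the helper names are hypothetical, and the sample line in the usage part is made up to show the format, not captured from a real machine.

```python
import subprocess
from typing import List, Tuple

# nvidia-smi query: one CSV line per GPU, without the header row.
QUERY = ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"]


def parse_gpu_csv(text: str) -> List[Tuple[str, str]]:
    """Turn 'name, driver_version' lines into (name, version) tuples."""
    gpus = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # Split on the last comma so that a comma inside the name is preserved.
        name, version = line.rsplit(",", 1)
        gpus.append((name.strip(), version.strip()))
    return gpus


def detected_gpus() -> List[Tuple[str, str]]:
    """Run the query on the host; raises if nvidia-smi is missing or fails."""
    output = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(output)


if __name__ == "__main__":
    # Made-up sample line, only to show the expected format.
    print(parse_gpu_csv("NVIDIA GeForce RTX 3080, 470.94\n"))
    try:
        print(detected_gpus())
    except (OSError, subprocess.CalledProcessError) as err:
        print(f"driver check failed: {err}")
```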

Installing nvidia-docker

Nvidia-docker is available on the GitHub project page. To install it, follow the installation guide that matches your server and architecture.
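With version 2, the NVIDIA runtime is registered in Docker's daemon configuration (`/etc/docker/daemon.json`). The nvidia-docker2 package normally writes this entry itself; the Python sketch below only shows what the registration looks like by merging it into an existing configuration. The function name is hypothetical, and after editing the real file the Docker daemon has to be restarted for the change to apply.

```python
import json
from typing import Any, Dict


def add_nvidia_runtime(config: Dict[str, Any], make_default: bool = False) -> Dict[str, Any]:
    """Return a copy of a daemon.json configuration with the 'nvidia' runtime registered."""
    updated = json.loads(json.dumps(config))  # deep copy; the input is left untouched
    runtimes = updated.setdefault("runtimes", {})
    runtimes["nvidia"] = {"path": "nvidia-container-runtime", "runtimeArgs": []}
    if make_default:
        # Lets containers use the NVIDIA runtime without `--runtime nvidia`.
        updated["default-runtime"] = "nvidia"
    return updated


if __name__ == "__main__":
    existing = {"log-driver": "json-file"}  # hypothetical existing settings
    print(json.dumps(add_nvidia_runtime(existing), indent=2))
```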

We now have an infrastructure that provides isolated environments with access to GPU resources. To use GPU acceleration in applications, NVIDIA has developed several tools (non-exhaustive list):

- CUDA Toolkit: a set of tools for developing software that can perform computations using the CPU, RAM and GPU. It can be used on x86, Arm and POWER platforms.

- [NVIDIA cuDNN](https://developer.nvidia.com/cudnn): a library of primitives to accelerate deep learning networks and optimize GPU performance for major frameworks such as TensorFlow and Keras.

- NVIDIA cuBLAS: a library of GPU-accelerated linear algebra subroutines.

By using these tools in application code, AI and linear algebra tasks are accelerated. With the GPUs now visible, the application can send data and operations to be processed on the GPU.
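Once the GPU is visible in the container, a framework such as TensorFlow decides where each operation runs. The sketch below assumes TensorFlow 2.x is installed in the container: it lists the GPUs with `tf.config.list_physical_devices`, places a matrix multiplication on the first GPU when one exists and falls back to the CPU otherwise. The helper names are hypothetical.

```python
from typing import Sequence, Tuple


def pick_device(gpu_names: Sequence[str]) -> str:
    """Return a TensorFlow device string: the first GPU if one is visible, else the CPU."""
    return "/GPU:0" if gpu_names else "/CPU:0"


def matmul_on_best_device(size: int = 1024) -> Tuple[str, tuple]:
    """Multiply two random matrices on the best available device (requires TensorFlow 2.x)."""
    import tensorflow as tf  # imported here so pick_device() works without TensorFlow

    gpus = tf.config.list_physical_devices("GPU")
    device = pick_device([gpu.name for gpu in gpus])
    with tf.device(device):
        a = tf.random.uniform((size, size))
        b = tf.random.uniform((size, size))
        c = tf.matmul(a, b)  # on a GPU, TensorFlow typically delegates this to cuBLAS
    return device, tuple(c.shape)


if __name__ == "__main__":
    try:
        print(matmul_on_best_device())
    except ImportError:
        print("TensorFlow is not installed in this environment")
```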

The CUDA Toolkit is the lowest-level option. It offers the most control (over memory and instructions) for building custom applications. Libraries provide an abstraction of CUDA functionality, allowing you to focus on application development rather than on the CUDA implementation.
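To show what the lowest level looks like, here is a CPU-only Python simulation of a CUDA-style kernel launch for a vector addition. It is not CUDA code: it reproduces, with ordinary loops, the indexing scheme a CUDA kernel uses (`blockIdx.x * blockDim.x + threadIdx.x`), and the function names are hypothetical. With a library such as cuBLAS, this whole kernel would be replaced by a single library routine (a BLAS `axpy`, for instance).

```python
import math
from typing import List


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: List[float], b: List[float], c: List[float]) -> None:
    """Body of a CUDA-style kernel: each thread handles one element."""
    i = block_idx * block_dim + thread_idx  # global thread index, as in CUDA
    if i < len(c):  # guard: the last block may have more threads than elements
        c[i] = a[i] + b[i]


def launch(a: List[float], b: List[float], block_dim: int = 256) -> List[float]:
    """Sequential stand-in for a kernel launch with grid_dim blocks of block_dim threads."""
    n = len(a)
    c = [0.0] * n
    grid_dim = math.ceil(n / block_dim)  # enough blocks to cover all n elements
    for block_idx in range(grid_dim):    # on a GPU, blocks and threads run in parallel
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, block_dim, thread_idx, a, b, c)
    return c


if __name__ == "__main__":
    print(launch([1.0, 2.0, 3.0], [10.0, 20.0, 30.0], block_dim=2))  # → [11.0, 22.0, 33.0]
```

Choosing the block size, the grid size and the memory transfers is exactly the kind of control the CUDA Toolkit leaves to the developer.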

Once all these elements are in place, the architecture using the nvidia-docker service is ready to use.

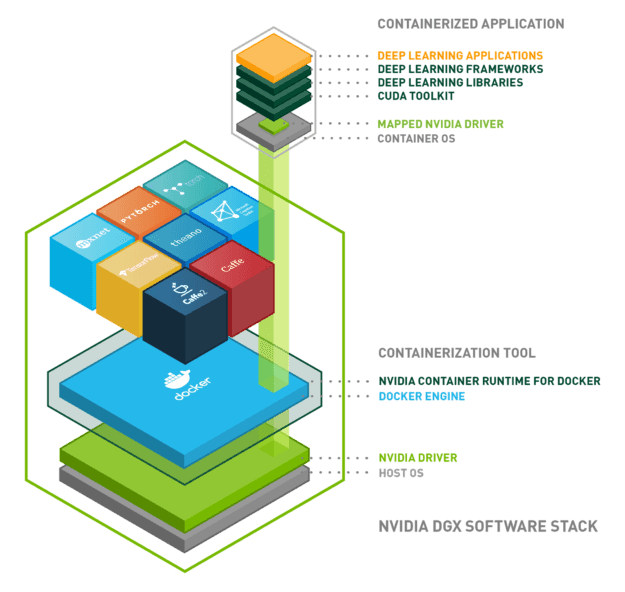

Here is a diagram to summarize almost everything we have witnessed:

Summary

We have set up an architecture allowing our applications to use GPU resources in isolated environments. To summarize, the architecture is composed of the following bricks:

- Operating system: Linux, Windows …

- Docker: isolation of the environment using Linux containers

- NVIDIA driver: installation of the driver for the hardware in question

- NVIDIA container runtime: orchestration of the previous three

- Applications in the Docker container:

    - CUDA

    - cuDNN

    - cuBLAS

    - TensorFlow/Keras

NVIDIA continues to develop tools and libraries around AI technologies, with the goal of establishing itself as a leader. Depending on the use case, other technologies may complement nvidia-docker or be more appropriate than it.